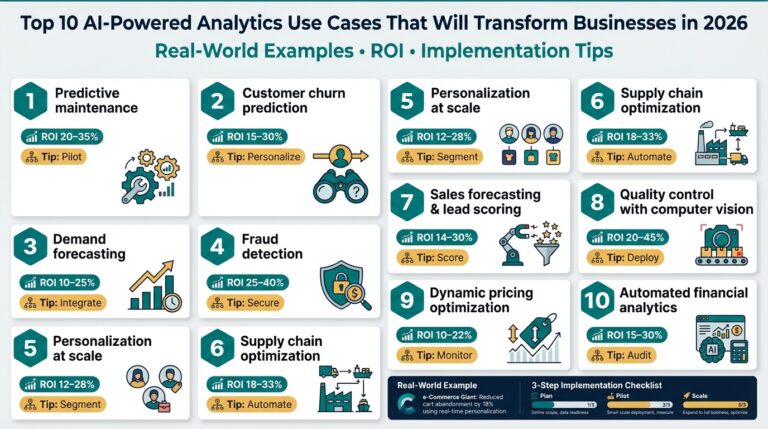

Top 10 AI-Powered Analytics Use Cases That Will Transform Businesses in 2026 — Real-World Examples, ROI, and Implementation Tips

Why AI Analytics Matters

If you’ve ever stared at dashboards that show what happened last quarter and wondered how to act now, you’re not alone. AI analytics brings predictive analytics and real-time analytics into your day-to-day operations, so you can move from reactive reporting to proactive decision-making. How do you